THE ECONOMIST.

A cautionary tale about the promises of modern brain science

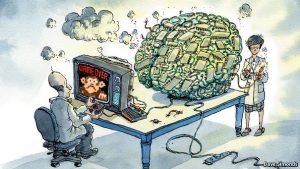

But a paper just published in PLOS Computational Biology questions whether more information is the same thing as more understanding. It does so by way of neuroscience’s favourite analogy: comparing the brain to a computer. Like brains, computers process information by shuffling electricity around complicated circuits. Unlike the workings of brains, though, those of computers are understood on every level.

Eric Jonas of the University of California, Berkeley, and Konrad Kording of Northwestern University, in Chicago, who both have backgrounds in neuroscience and electronic engineering, reasoned that a computer was therefore a good way to test the analytical toolkit used by modern neuroscience. Their idea was to see whether applying those techniques to a microprocessor produced information that matched what they already knew to be true about how the chip works.

Their test subject was the MOS Technology 6502, first produced in 1975 and famous for powering, among other things, early Atari, Apple and Commodore computers. With just 3,510 transistors, the 6502 is simple enough for enthusiasts to have created a simulation that can model the electrical state of every transistor, and the voltage on every one of the thousands of wires connecting those transistors to each other, as the virtual chip runs a particular program. That simulation produces about 1.5 gigabytes of data a second—a large amount, but well within the capabilities of the algorithms currently employed to probe the mysteries of biological brains.

The chips are down

One common tactic in brain science is to compare damaged brains with healthy ones. If damage to part of the brain causes predictable changes in behaviour, then researchers can infer what that part of the brain does. In rats, for instance, damaging the hippocampuses—two small, banana-shaped structures buried towards the bottom of the brain—reliably interferes with the creatures’ ability to recognise objects.

When applied to the chip, though, that method turned up some interesting false positives. The researchers found, for instance, that disabling one particular group of transistors prevented the chip from running the boot-up sequence of “Donkey Kong”—the Nintendo game that introduced Mario the plumber to the world—while preserving its ability to run other games. But it would be a mistake, Dr Jonas points out, to conclude that those transistors were thus uniquely responsible for “Donkey Kong”. The truth is more subtle. They are instead part of a circuit which implements a much more basic computing function that is crucial for loading one piece of software, but not some others.
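The trap can be reproduced in miniature. The sketch below is a toy model, not the researchers' method or the 6502's real layout: the unit names, the "games" and the rule that a disabled unit outputs zero are all assumptions made for illustration. It disables one unit at a time and records which programs stop producing their healthy output.

```python
from typing import Callable, Dict, List, Set, Tuple

# Hypothetical functional units of a toy chip (NOT the 6502's actual circuits).
UNITS: Dict[str, Callable[[int, int], int]] = {
    "add": lambda a, b: (a + b) & 0xFF,
    "shift": lambda a, b: (a << b) & 0xFF,
    "xor": lambda a, b: a ^ b,
}

# Hypothetical boot sequences: each step is (unit, operand_a, operand_b).
PROGRAMS: Dict[str, List[Tuple[str, int, int]]] = {
    "game_a": [("add", 1, 2), ("shift", 3, 1)],
    "game_b": [("add", 4, 5), ("xor", 6, 3)],
    "game_c": [("xor", 7, 1), ("add", 2, 2)],
}


def run(program: List[Tuple[str, int, int]], lesioned: Set[str]) -> List[int]:
    """Run a program; a lesioned unit is assumed to output 0 whatever its inputs."""
    return [0 if unit in lesioned else UNITS[unit](a, b) for unit, a, b in program]


def lesion_study() -> Dict[str, Set[str]]:
    """For each unit, list the programs whose output changes when it is disabled."""
    broken: Dict[str, Set[str]] = {}
    for unit in UNITS:
        broken[unit] = {
            name
            for name, program in PROGRAMS.items()
            if run(program, {unit}) != run(program, set())
        }
    return broken


if __name__ == "__main__":
    for unit, games in lesion_study().items():
        print(f"disable {unit!r}: breaks {sorted(games)}")
```

In this toy, disabling `shift` breaks only `game_a`. A naive reading would label it "the `game_a` unit", when it is really a general-purpose operation that only one of the three programs happens to use.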

The researchers also analysed the chip’s wiring diagram, something biologists would call its connectome. Feeding this into analytical algorithms yielded lots of superficially impressive data that hinted at the presence of some of the structures which the researchers knew were present within the chip. On closer inspection, though, little of it turned out to be useful. The patterns were a mishmash of unrelated structures that were as misleading as they were illuminating. This fits with the frustrating experience of real neuroscience. Researchers have had a complete connectome of a tiny worm, Caenorhabditis elegans, which has just 302 nerve cells, since 1986. Yet they understand much less about how the creature’s “brain” works than they do about computer chips with millions of times as many components.

The essential problem, says Dr Jonas, is that the neuroscience techniques failed to find many chip structures that the researchers knew were there, and which are vital for comprehending what is actually going on inside the chip. Chips are made from transistors, which are tiny electronic switches. These are organised into logic gates, which implement simple logical operations. Those gates, in turn, are organised into structures such as adders (which do exactly what their name suggests). An arithmetic logic unit might contain several adders. And so on.
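That hierarchy can be sketched in a few lines. The example below is a minimal illustration, assuming a single NAND gate as the basic building block (a common textbook choice, not a description of the 6502's actual circuitry). Gates are combined into a one-bit full adder, and eight of those are chained into an 8-bit adder of the kind an arithmetic logic unit might contain. All function names are hypothetical.

```python
from typing import Tuple


def nand(a: int, b: int) -> int:
    """The only primitive gate: output is 0 only when both inputs are 1."""
    return 1 - (a & b)


# Every other gate is built purely from NAND.
def and_(a: int, b: int) -> int:
    n = nand(a, b)
    return nand(n, n)


def or_(a: int, b: int) -> int:
    return nand(nand(a, a), nand(b, b))


def xor(a: int, b: int) -> int:
    n = nand(a, b)
    return nand(nand(a, n), nand(b, n))


def full_adder(a: int, b: int, carry_in: int) -> Tuple[int, int]:
    """Add three bits; return (sum_bit, carry_out)."""
    partial = xor(a, b)
    sum_bit = xor(partial, carry_in)
    carry_out = or_(and_(a, b), and_(partial, carry_in))
    return sum_bit, carry_out


def add8(x: int, y: int) -> Tuple[int, int]:
    """Ripple-carry addition of two 8-bit numbers; return (result, final_carry)."""
    result, carry = 0, 0
    for i in range(8):
        bit, carry = full_adder((x >> i) & 1, (y >> i) & 1, carry)
        result |= bit << i
    return result, carry


if __name__ == "__main__":
    print(add8(3, 4))      # (7, 0)
    print(add8(200, 100))  # (44, 1): 300 overflows 8 bits, leaving 44 with a carry
```

Seen from the bottom, `add8` is nothing but a long run of `nand` calls. Recognising that those calls add up to "addition" is the kind of high-level inference the neuroscience techniques struggled to make.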

But inferring the existence of such high-level structures—working out exactly how the mess of electrical currents within the chip gives rise to a cartoon ape throwing barrels at a plumber—is difficult. That is not a problem unique to neuroscience. Dr Jonas draws a comparison with the Human Genome Project, the heroic effort to sequence a complete human genome that finished in 2003. The hope was that this would provide insights into everything from cancer to ageing. But it has proved much more difficult than expected to extract those sorts of revelations from what is, ultimately, just a long string of text written in the four letters of the genetic code.

Things were not entirely hopeless. The researchers’ algorithms did, for instance, detect the master clock signal, which co-ordinates the operations of different parts of the chip. And some neuroscientists have criticised the paper, arguing that the analogy between chips and brains is not so close that techniques for analysing one should automatically work on the other.

Gaël Varoquaux, a machine-learning specialist at the Institute for Research in Computer Science and Automation, in France, says that the 6502 in particular is about as different from a brain as it could be. Such primitive chips process information sequentially. Brains (and modern microprocessors) juggle many computations at once. And he points out that, for all its limitations, neuroscience has made real progress. The ins-and-outs of parts of the visual system, for instance, such as how it categorises features like lines and shapes, are reasonably well understood.

Dr Jonas acknowledges both points. “I don’t want to claim that neuroscience has accomplished nothing!” he says. Instead, he goes back to the analogy with the Human Genome Project. The data it generated, and the reams of extra information churned out by modern, far more capable gene-sequencers, have certainly been useful. But hype-fuelled hopes of an immediate leap in understanding were dashed. Obtaining data is one thing. Working out what they are saying is another.